EDITORIAL GUIDELINES

Mr. Jules Bonnet only standardizes in his edition of Calvin's French correspondence the spelling of the words: long s (ſ) for regular s, including the dissimilation of i and j, as well as the addition of basic punctuation marks in the form of apostrophes and dashes for the most part. Therefore, the source text is a “normalized” text when it comes to normalization of variant letter forms and basic punctuation, development of abbreviations and capitalization. The only other modern feature constitutes the grave accent on the preposition à to distinguish it from the verb conjugation of avoir, the development of vowel nasalization by replacing the tilde with the corresponding nasal consonant, and the substitution of the final z in words, standard in 16th century French, for the modern s (e.g., nouvellez becomes nouvelles). The rest remains in old French according to 16th century practices in terms of spelling, vocabulary and turns of phrases, which are generally heavier and more complex, and the tone is usually more formal as compared to modern standards. There is also the use of verbal tenses, such as the perfect past and the imperfect tense in the subjunctive mode and the second form of the past conditional, which are obsolete and rarely used in modern French. For the translation into modern French, the goal is to remain as faithful to the original text as possible. Consequently, only the spelling will be completely normalized according to modern standards, including the addition of all the accents, e.g. ayde becomes aide, oster becomes ôter, soubz becomes sous, bruict becomes bruit, etc. As for vocabulary and turns of phrases, they will not be touched for the most part, except for those instances when the modern equivalent may be needed in order to shed light over an obscure phrase or passage, leading to a better understanding. For instance, partement becomes départ, fort estonné becomes fort étonné, avoir/être mestier becomes avoir besoin, tant y a becomes pour conclure.

A list of basic modernization patterns was made in order to aid the overall encoding process. After analysis I reached the conclusion that these basic patterns correspond to pronouns, connecting words and discourse markers, and in this specific corpus some frequently used verbal forms whose spelling has changed over the centuries such as être, prendre, vouloir, voir, connaitre and their lexical derivatives, and the morphological form of the imperfect tense which has also changed –from the -ois or -oi(en)t ending to the modern -ais or -ai(en)t ending, depending on the person of the conjugation. E.g., estoit for était–.

Regarding the translation into Spanish, it will remain strictly faithful to the original in language, tone and style. Vous will be translated into usted and conjugated accordingly, since it was the usual practice in the Spanish speaking world at the time, thus matching the tone used by John Calvin in the correspondence. Names of persons and geographical places, pseudonyms and the like will not change except when they already have a widely recognized equivalent in Spanish; such is the case of Jean Calvin (Juan Calvino in Spanish, John Calvin in English), Genève (Ginebra in Spanish, Geneva in English).

The contextual notes will generally be found in the letter where the term was mentioned or implied; although some significant ones may also be indexed. The Latin fragments Calvin is sometimes so fond of using in his correspondence will be translated into modern French and into Spanish respectively. Editorial notes and translator’s notes might be added if and when appropriate, particularly when it pertains to the choices made regarding a specific sentence, phrase or paragraph. The latter will be indicated as such with an editorial note or translator’s note mention in the applicable language of the version in question. The TEI encoding follows the standard global guidelines in every aspect in order to achieve as high a level of interoperability of the data as possible.

The persName tags will include the attributes of @type and @ref when only part of the name appears, such as the surname, and the person can be identified. Example:

If these conditions do not apply, the persName tag will include no attributes:

Every fragment of text that needs to be highlighted, such as words in a foreign language –like Latin–, the titles of books, references and quotations, should be encoded as follows:

As for superscripts, they should be encoded by giving the value superscript to the @rend attribute:

The notes should be encoded as follows in order for the XSLT stylesheet to work correctly:

Choice tags can be included anywhere in the body, except inside the note tags.

PROCESSING THE LETTERS

When it came to the processing of the letters, it ended up being a series of decisions to be made in order to be as efficient and as comprehensive as possible both in approach and regarding Digital Humanities practices. The source text had to be analyzed and the original manuscripts located for the entire objective of this edition to be fulfilled.

Once the Library of Geneva confirmed that all documents would be freely accessible online, the most immediate task was finishing the census and sorting process of the manuscripts and copies in existence I had started at the Library of Geneva. The objective was to have a catalogue of the original sources available both there at the library and online elsewhere.

Once I had a fairly accurate catalogue of all the letters whose original manuscript or manuscript copy was available, it was time to make a selection, since I knew I would not have the time to process them all in a few months. I favored those whose original manuscripts where readily accessible and ready to display. Except, out of the 66 original manuscripts accounted for, over 40 letters featured two recurring recipients: Mr. and Mrs. de Falais; and it was still too many. Hence, I settled for ten originals and five manuscript copies spaced out over the period of the first volume of Jules Bonnet’s edition –from 1538 to 1554– and featuring different recipients such as Edward VI, the king of England at the time and son of Henry VIII, the duchess of Ferrara as well as a priest during a period of black plague in Geneva, and important events such as the last letter to Mr. de Falais in which he severs their years-long association and friendship.

The idea was to find a way to automatize the process as much as possible, to be able to produce several outputs, especially an HTML output for the website and a PDF printed format, from a single XML-encoded file.

At first, I thought of using OxGarage – a web service that manages the transformation of documents between a variety of formats; mainly, in this case, from Word (.docx) to interoperable formats such as TEI (XML-TEI) and/or HTML, but also from CSV and JSON–, and then Oxigen, which is one of the best XML editors available (and the one being used in the master’s program), since it provides a comprehensive set of authoring and development tools to curate and clean the resulting transformation file.

However, I realized that using this solution would be messier and more time-consuming than using an XML-TEI model template to be “fed” with the data. Usually, the transformation of a Word document to an encoded format is fairly generic and the result may not be uniform for all files; it also includes styling information that was not wanted at that stage. The XML file is to be the origin, open-source and reusable file from which other formats and versions can be generated through dynamic code like that of the Max publishing engine developed by the Pôle Document Numérique.1

In short, I wanted a more efficient way as well as control over what I was doing. Which proved to be a must later on when I created the XML-TEI model through the encoding of the first letter in the collection. When I tried using OxGarage to do the transformation to HTML, the result was not satisfactory and the editing time-consuming. Besides, both for the HTML and the PDF formats, the

Once I had the model for the encoded files and the functional code for the whole comparative interface, I organized the data according to the input formats and encoded structure needed: JavaScript for the OpenSeadragon viewer used for displaying the facsimiles of the manuscripts on the website through ARK (Archival Resource Key) links – which allow to call the image directly from the library website servers via an URL, and HTML for the library bibliographical records, snippets of which were taken from the library websites themselves and then enriched with the library catalogue information.

EDITORIAL PROCESS AND DIGITAL TOOLS

This critical edition will be using a series of editorial, translation and digital tools to achieve the wanted results.

The source text, the first volume of Jules Bonnet’s 1854 edition of Calvin’s French correspondence provided by Gallica, one the major digital libraries accessible for free online, can be downloaded in various formats, included raw text. This raw text is automatically generated by an optical character recognition (OCR) program with an estimated recognition rate of 97%. The letters are then processed and corrected in a procedure similar to that of a diplomatic transcription against the scanned copy in PDF format, which will also be used in the final version of the edition website as the source text.

Afterwards, Bonnet’s regularized transcription of the original 16th century manuscripts and copies are translated into modern French by directly encoding them in the Visual Studio Code editor following the XML-TEI model taken from the Roma ODD (One Document Does It All) editor. The model was then adapted to include the correspDesc and correspContext tags developed by the Consortium CAHIER for correspondence as well as other metadata tags that belong in the teiHeader. In the body, the opener, salute and closer tags, also from the Consortium CAHIER, are added to complete the structure of the encoded letter.

Then, the text of the letter as well as date, place and recipient data are introduced into the model including the notes, both those from Jules Bonnet’s edition and my own enriched ones. The modernization is encoded into choice tags with the corresponding orig tag for the original text and the reg tag for the regularized or modernized word or phrase.

The general structure of what was explained above looks like this:

Both the modernization and the translation into Spanish are carried out with the help of dictionaries and old French documentation such as the DMF: Dictionnaire du moyen français (Middle French Dictionary), the Cairn.info Portal, the Littré Dictionnary, the Petit Robert French dictionary and some other online articles and tools.

The DMF is one of the most useful tools among them, particularly regarding old French. The latter is produced and published by ATILF, a public research laboratory in humanities and social sciences specialized in language sciences that is part of the CNRS (National Center for Scientific Research, according to the French acronym) and the University of Lorraine. This dictionary possesses a massive database of old French terms, including examples and a text corpus. It will find the lemma for the word entered as well as the different versions or forms of it over the years and contexts according to the text corpus incorporated in a database containing 219 open access texts and a corpus of 388 medieval texts before 1550 requiring a subscription2. A lemmatization formulary, a lemmatization platform and a corpus search can be found among its main tools.

The encoding of the modern French version inside the encoding environment is somewhat sped up by the use of a list of recurrent terms already encoded:

By using the search and replace tool of the code editor, several instances of a recurring word or phrase can be done at once. Of course, the rest of modifications that do not conform to the pattern and represent new instances are done manually. However, the list of “choice tag patterns” –as I call it– can be enriched and constantly updated as the letters are being processed and translated, since new patterns, common to some of the letters, emerge from time to time.

Once the letters have been translated into modern French, they are then translated into Spanish while maintaining the original register and style. The Deepl online translator is used at this time to process the bulk of the text to later manually edit and proofread the computer-assisted translation in order to produce a quality rendition, faithful to the original manuscripts of Calvin’s French letters, for Spanish speakers.

The translation into Spanish, once ready, will be introduced into the same encoded model as that used for the modernization, minus the choice tags.

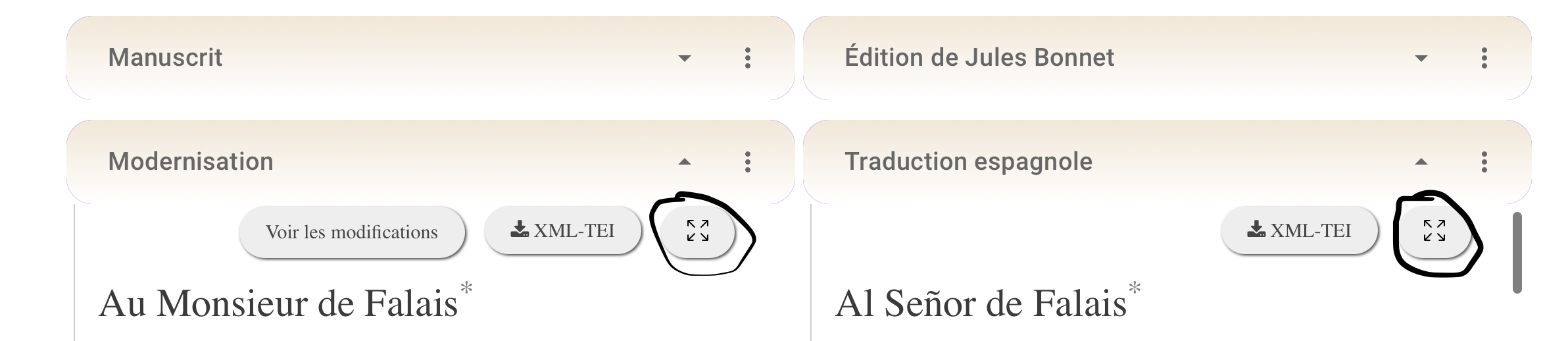

The transformation into the HTML format for the website is made automatically through a piece of JavaScript code I adapted from W3School3 to transform XML to XHTML in the Browser. The code basically takes the XML file and the XSLT file and applies the transformation specifications contained in the XSLT file to display it inside the HTML element indicated on the page. I created three XSLT files –which are basically the same, except for very subtle differences–: one for the clean modern French version, another for the version where one can see the modernization process (the changes made) and another for the translation into Spanish. This allows for a semiautomatic process that produces two output formats from a single XML file –the PDF can be produced by clicking on the button to enlarge the version of the letter one wants to print so that it takes up the entire screen in order to print it with the Print option every Browser possesses.

The critical edition is hosted on a server provided by the Université de Franche-Comté, in particular by the Rare Books and Digital Humanities master program. It runs on a WordPress content management system (CMS).

All XML and XSLT transformation files are uploaded onto the server for the content to be published, as explained above, once the aforementioned process is complete. The source XML file is available for download. The terms for reusing the content are stated on the Legal Mentions page.

In addition, each letter will be accessible from various places on the website, that is, through a series of indexes and visualization tools such as TimeLineJs and StoryMapJs developed by Northwestern University Knight Lab.

References

1 “Outils | Maison de La Recherche En Sciences Humaines,” accessed June 23, 2022, https://www.unicaen.fr/recherche/mrsh/document_numerique/outils.

2 “Dictionnaire Du Moyen Français (1330-1500) Les Textes.” n.d. Dictionnaire électronique. Dictionnaire Du Moyen Français (1330-1500). Accessed August 21, 2021. http://zeus.atilf.fr/dmf/.

3 “XSLT on the Client,” accessed June 23, 2022, https://www.w3schools.com/xml/xsl_client.asp.

Source: Yanet Hernández Pedraza. “New Digital Critical Edition of John Calvin’s Collection of French Correspondence (Selection).” Master’s Thesis, Université de Franche-Comté (2022), 34.